Hello and welcome to this article, where we will discuss Word2Vec in depth. In our previous blog, we covered different techniques for text representation, such as Bag of Words and TF-IDF. All of these techniques are broadly referred to as word embedding.


What Are Word Embeddings?

In NLP, word embedding is a technique that represents each word as a vector of numbers. The mapping is designed to encode the meaning of a word, so that words lying close together in the vector space are expected to have similar meanings.

Word embedding techniques fall into two broad types.

1) Frequency-based word embedding techniques: the vectors are built from how often words occur in the corpus. Examples are Bag of Words and TF-IDF, which we studied in previous articles.

2) Prediction-based word embedding techniques: the vectors are learned by training a neural network to predict words from their surrounding words (or the reverse). Word2Vec, the topic of this article, is an example of this type.
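To make the frequency-based side concrete, here is a minimal hand-rolled Bag of Words count vectorizer in plain Python. The two documents and the `bag_of_words` helper are made up for illustration. Each document vector has one entry per vocabulary word, so with a real vocabulary of tens of thousands of words these vectors become very long and mostly zero.

```python
from collections import Counter

# Two made-up documents, already lowercased.
docs = ["the cat sat on the mat", "the dog sat on the log"]

# Vocabulary = every distinct word; its size is the vector dimension.
vocab = sorted({word for doc in docs for word in doc.split()})


def bag_of_words(doc):
    """Count how often each vocabulary word occurs in the document."""
    counts = Counter(doc.split())
    return [counts[word] for word in vocab]


vectors = [bag_of_words(doc) for doc in docs]
print(vocab)       # ['cat', 'dog', 'log', 'mat', 'on', 'sat', 'the']
for vec in vectors:
    print(vec)     # [1, 0, 0, 1, 1, 1, 2] then [0, 1, 1, 0, 1, 1, 2]
```

Note that "cat" and "dog" get completely separate dimensions, so nothing in these counts says the two words are related; prediction-based methods such as Word2Vec aim to capture exactly that kind of relationship.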

What Is Word2Vec?

Word2Vec is a neural-network-based word embedding technique that converts a word into a collection of numbers (a vector). Why do we need this? A model cannot take raw words as input, so every word has to be converted into numeric form first. Word2Vec was introduced by researchers at Google in 2013, and its research paper is freely available online.

Advantages of Word2Vec Over Bag of Words and TF-IDF

- It captures the semantic meaning of words. For example, "happy" and "joy" have very similar meanings, and a Word2Vec model can learn this relationship by placing their vectors close together.

- With the older techniques, the vector dimension equals the vocabulary size, which is usually very large. Word2Vec produces low-dimensional vectors, typically between 100 and 300 dimensions, so computation is faster.

- Word2Vec creates dense vectors (most values are non-zero) rather than sparse ones, so it avoids the problems of huge, mostly-zero matrices. Compact dense features can also reduce the overfitting that very high-dimensional sparse features tend to cause.

Intuition Behind Word2Vec from Scratch

Let us understand the complete algorithm and how it works from scratch. Suppose we want to build our own version of Word2Vec. In essence, Word2Vec comes up with a set of features for the words and assigns every word a value for each feature. It finds these values by solving a dummy (auxiliary) problem, and the resulting values form each word's vector.

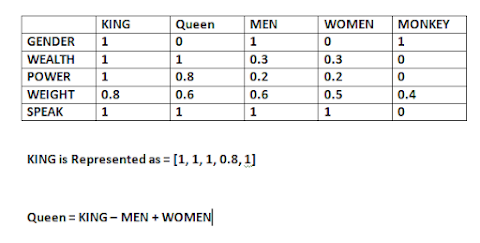

👉 Suppose we have the input words shown in the table, and we create some features based on our own understanding, assigning each word a value (weight) between 0 and 1 for every feature. Combining all the feature weights for a particular word gives us the vector representation of that word.

This is essentially what happens behind the scenes. The problem is that in the real world you have millions of words, and hand-crafting features for all of them would take enormous effort, so neural networks are used to learn these features automatically. The downside is that you never know what a particular learned feature means, for example whether it captures gender or wealth. You only know the values of the features, not their names.
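The hand-crafted table idea can be sketched in a few lines of Python. The feature names (gender, wealth, power), the four words, and every value are hypothetical, picked by hand the way the table does; a trained Word2Vec model learns its own unnamed features instead. The sketch also hints at why such vectors are useful: simple arithmetic on them, king - man + woman, lands closest to queen.

```python
import math

# Hypothetical hand-made features, each valued between 0 and 1:
# [gender (1.0 = male, 0.0 = female), wealth, power].
features = {
    "king":  [1.0, 0.9, 0.95],
    "queen": [0.0, 0.9, 0.9],
    "man":   [1.0, 0.3, 0.3],
    "woman": [0.0, 0.3, 0.3],
}

# Combine the vectors element by element: king - man + woman.
result = [k - m + w for k, m, w in zip(features["king"], features["man"], features["woman"])]

# The closest word (by Euclidean distance) to the combined vector.
nearest = min(features, key=lambda word: math.dist(features[word], result))
print(nearest)  # queen
```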

How Are Features Decided in the Word2Vec Model?

So how are the features decided? The core logic is that "two words sharing a similar context also share a similar meaning, and consequently a similar vector representation from the model". In simple words, when two words are used in the same kind of surrounding text, their representations will also be similar. For example, consider the two sentences below.

The football player took a shot.

The hockey player took a shot.

Here, football and hockey are used in the same context, so a Word2Vec model will learn similar vectors for both words, treating them as similar kinds of sport. This is an overview of how features are selected; it will become clearer later in the article.
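A quick way to see the shared context is to collect the neighbours of each word. The sketch below lowercases the two example sentences, drops the period, and gathers every word within two positions of the target; the `contexts` helper and the 2-word window are illustrative choices.

```python
# The two example sentences, lowercased and without the final period.
sentences = [
    "the football player took a shot",
    "the hockey player took a shot",
]


def contexts(word, sentences, window=2):
    """Collect the words within `window` positions of `word` in each sentence."""
    found = set()
    for sentence in sentences:
        tokens = sentence.split()
        for i, token in enumerate(tokens):
            if token == word:
                lo = max(0, i - window)
                found.update(tokens[lo:i] + tokens[i + 1:i + 1 + window])
    return found


print(sorted(contexts("football", sentences)))  # ['player', 'the', 'took']
print(sorted(contexts("hockey", sentences)))    # ['player', 'the', 'took']
```

Both words end up with identical neighbours, which is exactly the signal Word2Vec uses to push their vectors together.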

Types of Word2Vec Models

There are two Word2Vec architectures: CBOW (Continuous Bag of Words) and skip-gram. Both are shallow neural networks with a single hidden layer, and they differ only in what they predict. If you know a little about neural networks, both models will be easy to follow.

1) CBOW (Continuous Bag of Words)

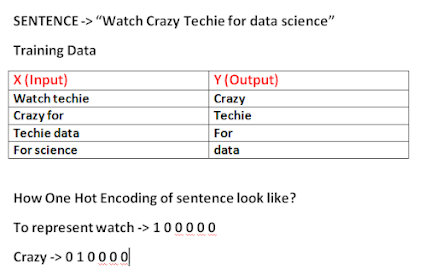

Now let us discuss how Word2Vec converts a word into a vector. We cannot convert a word into a vector directly, so we set up a fake (dummy) problem and train a network to solve it; the word vectors come out as a by-product of that training. Because the conversion is based on context, the dummy problem is built from context too. Suppose we take a sentence and slide a window of 3 words across it. The middle word is called the target word, and the words just before and after it are called context words. The problem statement is then: given the context words, predict the target word.
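The dummy training data for this problem can be generated with a short sliding-window function. The six-word sentence and the `cbow_pairs` helper are hypothetical; following the description above, the window only slides over positions where a full window fits, whereas real implementations also use the edge words with a shorter context.

```python
def cbow_pairs(tokens, window_size=3):
    """Return (context_words, target_word) pairs.

    window_size counts the target too, so 3 means one word on each side.
    """
    half = window_size // 2
    pairs = []
    for i in range(half, len(tokens) - half):
        context = tokens[i - half:i] + tokens[i + 1:i + 1 + half]
        pairs.append((context, tokens[i]))
    return pairs


sentence = "the player took a quick shot".split()
for context, target in cbow_pairs(sentence):
    print(context, "->", target)
```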

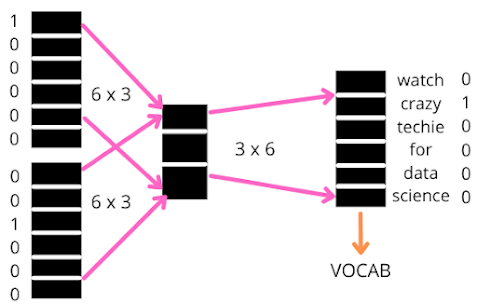

We have now prepared dummy training data using a window size of 3. For this training data, we build one fully connected neural network. Our example vocabulary has 6 unique words, so each word is one-hot encoded as a vector of 6 values:

- The input layer has 12 neurons: 6 for each of the 2 context words.

- The hidden layer has 3 neurons.

- The output layer has 6 neurons, one per vocabulary word, giving the predicted probability of each word being the target.

The compact form of the architecture is shown below. The network is trained in the usual way with forward and backward propagation: each time, we calculate the loss and update the weights to minimize it. After training, each word is represented by the 3 weights connecting its input node to the hidden layer, that is, a 3-dimensional vector. The number of hidden neurons sets the vector dimension, so you decide how many dimensions you want. You only need to understand how the network is trained; libraries take care of the implementation.
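To make the training loop concrete, here is a minimal CBOW sketch in plain Python with a 6-word vocabulary and 3 hidden neurons. The example sentence, learning rate, and epoch count are arbitrary illustrative choices. One difference from the 12-input picture: like the original Word2Vec, this sketch shares a single input weight matrix between both context positions and averages the two context rows, so each word ends up with exactly one 3-value row. It also uses a plain softmax over the whole vocabulary instead of the speed-ups (negative sampling, hierarchical softmax) that real libraries use.

```python
import math
import random

random.seed(0)  # make the random initialisation repeatable

# Hypothetical example sentence with 6 unique words.
sentence = "the player took a quick shot".split()
vocab = sorted(set(sentence))
index = {word: i for i, word in enumerate(vocab)}
V, N = len(vocab), 3  # vocabulary size (6) and hidden-layer size (3)

# Window size 3: the words on either side (context) -> the middle word (target).
pairs = [([sentence[i - 1], sentence[i + 1]], sentence[i])
         for i in range(1, len(sentence) - 1)]

# Input weights (V x N): after training, row i is the vector for word i.
W_in = [[random.uniform(-0.5, 0.5) for _ in range(N)] for _ in range(V)]
# Output weights (N x V): turn the hidden layer into one score per word.
W_out = [[random.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(N)]


def forward(context):
    """Average the context rows of W_in, score every word, apply softmax."""
    h = [sum(W_in[index[w]][k] for w in context) / len(context) for k in range(N)]
    scores = [sum(h[k] * W_out[k][j] for k in range(N)) for j in range(V)]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return h, [e / total for e in exps]


def average_loss():
    """Mean cross-entropy of predicting each target from its context."""
    return sum(-math.log(forward(c)[1][index[t]]) for c, t in pairs) / len(pairs)


learning_rate = 0.5
start = average_loss()
for _ in range(500):  # epochs
    for context, target in pairs:
        h, probs = forward(context)
        # Output error: predicted probabilities minus the one-hot target.
        err = [p - (1.0 if j == index[target] else 0.0) for j, p in enumerate(probs)]
        # Error passed back to the hidden layer (uses W_out before it changes).
        grad_h = [sum(W_out[k][j] * err[j] for j in range(V)) for k in range(N)]
        for k in range(N):
            for j in range(V):
                W_out[k][j] -= learning_rate * h[k] * err[j]
        for w in context:
            for k in range(N):
                W_in[index[w]][k] -= learning_rate * grad_h[k] / len(context)
end = average_loss()
print(f"average loss before: {start:.3f}, after: {end:.3f}")

# Each word's learned 3-dimensional vector is its row of W_in.
for word in vocab:
    print(word, [round(x, 3) for x in W_in[index[word]]])
```

With such a tiny dataset the learned vectors carry no real meaning; the point is that once the loss has dropped, the rows of `W_in` are the word vectors.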

2) Skip-Gram Model

The skip-gram model uses the same concept, but it is the exact opposite of CBOW. We still want a vector representation of each word and still use a dummy problem, but the dummy problem is reversed: "Given the target word, predict the context words". The architecture is reversed too, and we slide the window across the sentence in the same way, one word at a time. We have 6 nodes in the input layer, the same 3 nodes in the hidden layer, and 12 nodes in the output layer (the opposite of the CBOW architecture above).
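Reversing the dummy problem changes the training data. The sketch below reuses the same hypothetical sentence and 3-word window but emits one (target, context) pair per context word; the `skipgram_pairs` helper is illustrative. Practical implementations such as the original Word2Vec treat each of these pairs as a separate prediction through a shared output layer, rather than literally predicting both context words through 12 output nodes at once.

```python
def skipgram_pairs(tokens, window_size=3):
    """Return (target_word, context_word) pairs, the reverse of CBOW.

    window_size counts the target too, so 3 means one word on each side.
    """
    half = window_size // 2
    pairs = []
    for i in range(half, len(tokens) - half):
        for j in range(i - half, i + half + 1):
            if j != i:
                pairs.append((tokens[i], tokens[j]))
    return pairs


sentence = "the player took a quick shot".split()
for target, context in skipgram_pairs(sentence):
    print(target, "->", context)
```

Each CBOW example becomes two skip-gram examples here, so skip-gram performs more updates per pass over the same text, which is one reason it trains more slowly.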

When to Use CBOW and When to Use Skip-gram?

- According to the notes published with the original Word2Vec release, CBOW is several times faster to train than skip-gram and gives slightly better accuracy for frequent words, which makes it a good choice when training time matters.

- Skip-gram works well even with a small amount of training data and represents rare words and phrases well, at the cost of slower training.

How Can You Improve the Quality of Word2Vec Vectors?

- Increase the training data: more training text is usually the most reliable way to improve the vectors.

- Increase the vector dimension: this sometimes produces better results.

- Increase the window size: this can also improve the results, but training takes longer because each word then generates more training pairs.
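A rough way to see why a bigger window costs more training time is to count the skip-gram-style (target, context) pairs that one sentence produces. The 20-word sentence length and the `count_pairs` helper are illustrative. In practice, Word2Vec implementations such as Gensim's randomly shrink the window for each word by default, so actual counts are lower, but they still grow with the window size.

```python
def count_pairs(num_tokens, half_window):
    """Count (target, context) pairs in one sentence of num_tokens words.

    Words near the sentence edges get a truncated window.
    """
    total = 0
    for i in range(num_tokens):
        left = min(half_window, i)                    # words available on the left
        right = min(half_window, num_tokens - 1 - i)  # words available on the right
        total += left + right
    return total


# A hypothetical 20-word sentence with growing window sizes.
for half_window in (1, 2, 5, 10):
    print(f"window={half_window:2d}: {count_pairs(20, half_window)} training pairs")
```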

Training Your Own Word2Vec Model

We have studied both architectures and have an idea of how both models work, so now it is time for some hands-on practice applying these models to a real dataset. To make it interesting, I am using a Game of Thrones (a popular TV show) dataset from Kaggle.

1) Dataset Overview

You can easily find the dataset on Kaggle or access it through this link. The dataset contains 5 text files, one for each of the 5 books the Game of Thrones series is based on. Let us move on to the implementation. I hope you have downloaded the dataset and kept it in one folder, or created a Kaggle notebook for practice.

2) Install Libraries

To load and work with the text data, we need the Gensim and NLTK Python libraries, which are used for text mining and preprocessing in NLP. You can install them from the command line, or from a Jupyter notebook by putting an exclamation mark (!) before each command. If you are working in a Kaggle notebook, there is no need to install anything.

```shell
pip install gensim
pip install nltk
```

3) Load the Data

Now we load each file, read its content into a corpus (a collection of all the text), and break that content into sentences. Once we have the sentences, we apply basic text preprocessing to remove extra spaces and punctuation and to avoid character-case problems.

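```python
import os

import gensim
import nltk
from nltk import sent_tokenize
from gensim.utils import simple_preprocess

# sent_tokenize needs NLTK's Punkt models (newer NLTK versions use 'punkt_tab')
nltk.download('punkt')
nltk.download('punkt_tab')

data_path = '../input/game-of-thrones-books'

story = []  # stores every preprocessed sentence as a list of tokens

for filename in os.listdir(data_path):
    filepath = os.path.join(data_path, filename)
    # read as text; calling str() on raw bytes would keep literal "\n" escapes
    with open(filepath, encoding='utf-8', errors='ignore') as f:
        corpus = f.read()

    # split the book into sentences, then lowercase and tokenize each one
    raw_sent = sent_tokenize(corpus)
    for sent in raw_sent:
        story.append(simple_preprocess(sent))
```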

After running the above code, story will contain approximately 145,000 sentences (the exact count can vary slightly with your NLTK version).

Build the Word2Vec Model

Gensim provides a built-in Word2Vec class. We set two main parameters:

- window: the maximum number of words taken on each side of the target word as context. The architecture discussed above used 1 word on each side; since our corpus is fairly large, we use 10.

- min_count: the minimum number of times a word must appear in the corpus to be included in training; rarer words are ignored.

```python
model = gensim.models.Word2Vec(
    window=10,
    min_count=2
)
```
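The effect of min_count can be illustrated with a few lines of plain Python. The tiny tokenized corpus below is made up, and this only mirrors the counting-and-filtering idea; Gensim's build_vocab does the real work and supports more options.

```python
from collections import Counter

# A tiny made-up tokenized corpus (a list of token lists, like `story`).
sentences = [
    ["jon", "snow", "rode", "north"],
    ["arya", "rode", "south"],
    ["jon", "and", "arya", "talked"],
]

min_count = 2
counts = Counter(word for sentence in sentences for word in sentence)

# Words seen fewer than min_count times are left out of the vocabulary.
vocab = sorted(word for word, n in counts.items() if n >= min_count)
print(vocab)  # ['arya', 'jon', 'rode']
```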

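Word2Vec accepts many more parameters, for example vector_size (the vector dimension, 100 by default) and sg (0 for CBOW, the default, or 1 for skip-gram). In a Jupyter notebook, you can see all of them by pressing Shift+Tab inside the call.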

1) Model Training

First, we extract the unique words in the corpus using build_vocab(). Then we train the model with train(), passing three important arguments:

- Corpus: the complete tokenized data to train on (story).

- total_examples: the total number of sentences in the corpus. build_vocab() stores this count in model.corpus_count, so we pass that.

- epochs: how many times the model iterates over the data during training. model.epochs holds the value set when the model was created (5 by default).

```python
model.build_vocab(story)
model.train(story, total_examples=model.corpus_count, epochs=model.epochs)
```

Training takes a little while because the data is large. Once it finishes, we have a trained Word2Vec model and can use its functions to inspect the results.

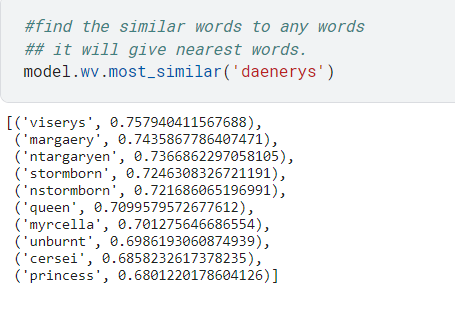

2) Find the Most Similar Words to Any Word

We can list the words most similar in meaning to any given word; by default, most_similar returns the 10 closest words with their similarity scores. If you have seen or read Game of Thrones, you know the characters' names; one of them is Daenerys, so let us find the words most similar to her name. The query is lowercase because simple_preprocess lowercased every token.

```python
model.wv.most_similar('daenerys')
```
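Conceptually, this ranks every other word by cosine similarity to the query word. Here is a minimal plain-Python sketch of that ranking; the `cosine` and `most_similar` helpers and the 3-dimensional vectors are hypothetical, not values from the trained model, and Gensim itself does this with fast matrix operations.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word, vectors, topn=3):
    """Rank every other word by cosine similarity to `word`."""
    scores = [(other, cosine(vectors[word], vec))
              for other, vec in vectors.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Hypothetical 3-dimensional vectors, not taken from a trained model.
vectors = {
    "daenerys": [0.9, 0.1, 0.3],
    "dragon":   [0.8, 0.2, 0.4],
    "khaleesi": [0.85, 0.15, 0.25],
    "wall":     [0.1, 0.9, 0.2],
    "winter":   [0.2, 0.8, 0.1],
}
for other, score in most_similar("daenerys", vectors):
    print(f"{other}: {score:.3f}")
```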

3) Find the Word That Doesn't Match

We can give the model a list of words and ask which one is least like the others. The trained model is good enough to return 'jon' here, which makes sense: the others are Ned Stark's trueborn children, brothers and sisters to each other, while Jon Snow was raised as Ned's illegitimate son and does not carry the Stark name.

```python
# find the odd one out from this list
model.wv.doesnt_match(['jon', 'rickon', 'robb', 'arya', 'sansa', 'bran'])
```
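One way to pick the odd one out, and roughly the idea behind Gensim's doesnt_match, is to average the unit-length vectors of all the words and return the word whose vector agrees least with that average. The `normalize` and `doesnt_match` helpers and the 2-dimensional vectors below are hypothetical, chosen for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def doesnt_match(words, vectors):
    """Return the word least similar to the mean of all the unit vectors."""
    unit = [normalize(vectors[w]) for w in words]
    mean = [sum(col) / len(unit) for col in zip(*unit)]
    # Dot product with the mean direction; the smallest score is the odd one out.
    scores = [sum(a * b for a, b in zip(u, mean)) for u in unit]
    return min(zip(scores, words))[1]


# Hypothetical 2-dimensional vectors, not from a trained model.
vectors = {
    "robb":  [0.9, 0.1],
    "arya":  [0.8, 0.2],
    "sansa": [0.85, 0.15],
    "jon":   [0.2, 0.9],
}
print(doesnt_match(["jon", "robb", "arya", "sansa"], vectors))  # jon
```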

4) Vector Representation of Any Word

To extract the vector representation of any word in the vocabulary, use the line of code below. It returns a NumPy array with 100 values, the default vector size.

```python
# find the vector representation of any word
model.wv['king']
```

5) Cosine Similarity Between Words

A common question is how similar two words are to each other, and Gensim has a separate function for this. It returns the cosine similarity between the two word vectors, a value between -1 and 1 where higher means more similar. This score is not a probability.

```python
model.wv.similarity('arya', 'sansa')
```
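For reference, this is the cosine similarity between two word vectors u and v with n dimensions (n = 100 with the default settings):

$$
\operatorname{similarity}(u, v) = \cos\theta = \frac{u \cdot v}{\lVert u \rVert \, \lVert v \rVert} = \frac{\sum_{i=1}^{n} u_i v_i}{\sqrt{\sum_{i=1}^{n} u_i^{2}} \, \sqrt{\sum_{i=1}^{n} v_i^{2}}}
$$

Because it depends only on the angle between the vectors, not their lengths, it is 1 for vectors pointing the same way, 0 for perpendicular vectors, and -1 for vectors pointing in opposite directions.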

6) Visualize the Similarity Between Words in the Corpus

Our word vectors have 100 dimensions, and we might want to see visually how the words relate to each other, but we cannot plot 100 dimensions directly. If you have studied machine learning, you know dimensionality reduction techniques such as PCA. We will use PCA to reduce the vectors from 100 to 3 dimensions.

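```python
from sklearn.decomposition import PCA

# reduce the 100-dimensional (unit-length) word vectors to 3 dimensions
pca = PCA(n_components=3)
X = pca.fit_transform(model.wv.get_normed_vectors())
X.shape
```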

7) Plot the Graph to Visualize the Similarity Between Words

Using Plotly, we can plot the words in 3-dimensional space. There are many words in the corpus, so I have plotted only 100 of them (the words ranked 200 to 299 by frequency).

```python
# plotting 100 words
import plotly.express as px

y = model.wv.index_to_key  # the words, in the same order as the rows of X
fig = px.scatter_3d(X[200:300], x=0, y=1, z=2, color=y[200:300])
fig.show()
```

🔖 Conclusion!

This is how the Word2Vec model works. After following this article, I hope you can implement a Word2Vec model yourself and explain how it works to an interviewer. Let us quickly recap the key points we learned.

- Word2Vec is a prediction-based word embedding technique with two architectures: CBOW and skip-gram.

- Both produce low-dimensional dense vectors, which are cheaper to compute with and more efficient than the high-dimensional sparse vectors of frequency-based models.

- To visualize Word2Vec vectors, we need dimensionality reduction techniques such as PCA or t-SNE.

- Gensim is a good library for word embeddings and basic text preprocessing.