Data analysis is the process of describing and examining data to understand it better and to find relationships, differences, patterns, or hidden information in it, which can then be used to develop a generalized machine learning model. Hello, and welcome, friends, to this article, where we will try hands-on data analysis on a very popular real dataset from Netflix.

Table of Contents

- Dataset Overview

- Basic Data Analysis

- Data Preprocessing

- Analyzing the data with different questions

- Conclusion

Dataset Overview

The dataset contains details of the TV shows and movies available on Netflix as of 2019. It was collected from Flixable, a third-party Netflix search engine. In 2018 they shared an interesting report showing that the number of TV shows on Netflix has tripled. It is an exciting dataset that invites many different analyses and has plenty more information to uncover.

Load the Data

The first task is to import the necessary libraries and load the dataset into a notebook so we can start exploring the data. If you are following along, I hope you have downloaded the dataset and created a new Jupyter notebook, Kaggle notebook, or Google Colab notebook. Use the code below to import the libraries.

#Import Libraries

import numpy as np #for mathematical calculations

import pandas as pd #data preprocessing

import matplotlib.pyplot as plt #data visualization

import seaborn as sns

#ignore all warnings

import warnings

warnings.filterwarnings("ignore")

- We use the read_csv function of Pandas to load CSV files. Pandas also provides similar functions for other sources such as Excel files, JSON, and SQL queries against databases.

- To observe the top 5 rows of the data we use the head function, and to observe the bottom 5 rows we use the tail function. You can also pass a custom number to either function to see that many rows from the top or the bottom.
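The two points above can be sketched as follows. This is a minimal example, not the article's original notebook code: the tiny in-memory CSV, its rows, and the file name mentioned in the comment are all assumptions made so the snippet runs without the real download.

```python
import io

import pandas as pd

# A tiny made-up stand-in for the real CSV so the example runs anywhere.
csv_text = """Category,Title,Director
Movie,Film A,Dir X
TV Show,Show B,
Movie,Film C,Dir Y
"""

# With the real file this would look like:
#   data = pd.read_csv("netflix_dataset.csv")  # hypothetical file name
data = pd.read_csv(io.StringIO(csv_text))

print(data.head())   # first 5 rows by default (here only 3 exist)
print(data.tail(2))  # last 2 rows
```

The empty Director field in the second row is read as a missing value, which is the kind of thing the later steps look for.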

Basic Data Analysis

Whenever you work on a problem statement that comes with a dataset, the first step is to ask some basic questions about the data to understand its overall structure.

1. What is the shape and size of the data?

The shape of structured (tabular) data is the number of rows and columns in the dataset, while the size is the total number of elements in it. If you run the commands below, you will see that the dataset has 7789 rows and 11 columns, so the total number of elements is 7789 × 11 = 85679.

data.shape #view shape(rows, columns)

data.size #total number of elements

2. What are the columns you have in the dataset?

It is also important to know which columns the dataset has and what they are called. Sometimes column names contain missing characters, stray spaces, and so on.

data.columns

3. How many data types do the columns have, and how many columns are of each data type?

Check the data type of each column and how many columns there are of each type. This matters most on higher-dimensional datasets, where you have to analyze the numerical and categorical variables separately.
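One way to answer this question is sketched below. The small DataFrame is made up for illustration; on the real dataset you would call the same methods on data.

```python
import pandas as pd

# Made-up rows standing in for the real dataset.
data = pd.DataFrame({
    "Title": ["Film A", "Show B"],
    "Category": ["Movie", "TV Show"],
    "year": [2013, 2019],
})

print(data.dtypes)                 # data type of every column
print(data.dtypes.value_counts())  # how many columns share each data type
data.info()                        # dtypes and non-null counts in one summary
```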

4. Is there a Null value present in the dataset?

Null values, also known as missing values, are the empty fields in a dataset; pandas displays them as NaN. Dealing with missing values is one of the major steps of data preprocessing.
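A common way to check for missing values is to count them per column. The sketch below uses a made-up DataFrame; the same calls apply to data.

```python
import numpy as np
import pandas as pd

# Made-up rows with two missing directors.
data = pd.DataFrame({
    "Title": ["Film A", "Show B", "Film C"],
    "Director": ["Dir X", np.nan, np.nan],
})

null_counts = data.isnull().sum()  # number of missing values in each column
print(null_counts)
print(data.isnull().values.any())  # True if any value at all is missing
```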

5. Check whether Duplicate values are present in the dataset or not?

Duplicate records create confusion and complexity, so they need to be checked for and treated.
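Duplicates can be counted with the duplicated method, which marks every repeated row after its first occurrence. A minimal sketch on made-up data:

```python
import pandas as pd

# Made-up rows; the third row repeats the first one exactly.
data = pd.DataFrame({
    "Title": ["Film A", "Show B", "Film A"],
    "Category": ["Movie", "TV Show", "Movie"],
})

dup_count = data.duplicated().sum()  # number of rows that repeat an earlier row
print(dup_count)
print(data[data.duplicated()])       # the repeated rows themselves
```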

Exploratory Data Analysis

Asking the basic questions has given us an overview of the dataset. Now we will dive deeper into analysing it using different methods, including data visualization. Exploratory data analysis involves several stages of data preparation, such as data preprocessing, data visualization, and feature engineering. We will move forward using what we learned from the questions above.

🔰 Task - 1) Delete the duplicate records from the dataset

Above we observed that the dataset has 2 duplicate records. Duplicate records add complexity and repetition to the results, so it is better to remove them.

data.drop_duplicates(inplace=True)

Pandas provides the drop_duplicates function, which deletes duplicate records so that each record is kept only once in the dataset. To make the deletion permanent on the dataframe itself, set the inplace parameter to True. If you check the shape of the data after deleting, you will see 7787 rows, which means the 2 duplicate records were removed successfully.

🔰 Task - 2) Show all the null values in the dataset using Heatmap?

A heatmap is a simple visualization that shows the density of data values in a graph, with the values depicted by colour. Matplotlib does not have a dedicated heatmap function, but the seaborn visualization library does. Plotting the null-value mask as a heatmap gives an idea of the missing values in each column.
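A minimal sketch of such a plot, assuming seaborn and matplotlib are installed. The DataFrame is made up; with the real dataset you would pass data.isnull() in the same way.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Made-up rows with a few missing values.
data = pd.DataFrame({
    "Title": ["Film A", "Show B", "Film C", "Show D"],
    "Director": ["Dir X", np.nan, np.nan, "Dir Y"],
})

# data.isnull() is a True/False table; seaborn colours the True cells differently,
# so columns with many missing values stand out as streaks.
ax = sns.heatmap(data.isnull(), cbar=False, yticklabels=False)
plt.show()
```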

👉 This type of visualization is used because you cannot put raw numbers in front of a client; to give the team, a client, or a manager a better idea of the data, we use plots like this.

Data Analysis Questions?

In this part, we will analyze the data, retrieve rows based on given conditions, build hypotheses, and try to answer questions from the data. So let's work through each question and learn how the data analysis process works.

🔰 Que-1) For the show 'House of Cards', display the show ID and the director of the show.

Pandas offers two functions for checking whether a column holds a particular value and displaying the rows that satisfy the condition: isin and contains.

i) using the isin function

The isin function checks each value against a list of values, so the search item must also be passed as a list. You can print the boolean result, print the matching rows, or add a column name at the end to get a particular column value. All three forms are shown below; try each of them to get a better idea.

data['Title'].isin(['House of Cards']) # It displays the boolean value of row index

data[data['Title'].isin(['House of Cards'])] # display complete row

data[data['Title'].isin(['House of Cards'])]['Director'] # to display specific value

ii) using the contains function

The contains function searches for a particular substring within each string of a column. So we first convert the values to strings and then search them for the value.

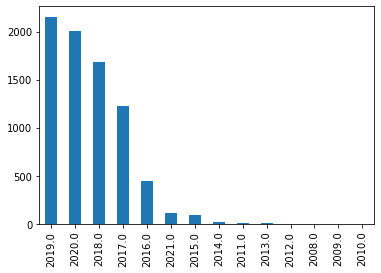
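In case the image does not load, the idea can be sketched like this. It is an illustration on made-up rows (the director names are placeholders), not necessarily the exact code from the image.

```python
import numpy as np
import pandas as pd

# Made-up rows; director names are placeholders, not real data.
data = pd.DataFrame({
    "Title": ["House of Cards", "Film A", np.nan],
    "Director": ["Dir X", "Dir Y", "Dir Z"],
})

# astype(str) turns missing titles into the text "nan", so the mask stays boolean.
mask = data["Title"].astype(str).str.contains("House of Cards")
result = data[mask]
print(result)
```

Unlike isin, contains matches substrings, so searching for "House" alone would also return this row.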

🔰 Que-2) In which year was the highest number of TV shows and movies released? Display the results with the help of a bar graph.

The question is about dates, so the first task is to convert the release date column from the object (string) data type to datetime. After that, the datetime accessor gives us attributes such as day, month, year, and weekday.

value_counts function - It returns the count of each distinct value in a column.

We can apply value_counts to the year to get the count for each year and then plot the graph using the pandas plot function.

#First thing is to convert the date to Datetime data type

data['Release_Date'] = pd.to_datetime(data['Release_Date'])

# show the results using Bar graph

data['Release_Date'].dt.year.value_counts().plot(kind="bar")

plt.show()

🔰 Que-3) With the help of a Bar graph display the total number of Movies and TV Shows present in a dataset?

If you guessed that the approach from the previous question also answers this one, you are absolutely right. There are multiple ways to solve a question, and you can definitely use the value_counts function on the Category column.

Alternative of Value Counts

We can also use the groupby function with an aggregate function here. groupby groups the data by the values of a column, and we can then apply an aggregate function such as count, which gives the total number of records for each value.

#data['Category'].value_counts()

## ALTERNATE WAY

data.groupby('Category').Category.count()

Alternative of Bar Graph

The seaborn library provides a countplot function, which plots a bar graph of the count of each value in a column.
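A minimal sketch of a count plot, assuming seaborn is installed. The three rows are made up; on the real dataset the call would take data and the Category column in the same way.

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Made-up rows: two movies and one TV show.
data = pd.DataFrame({"Category": ["Movie", "Movie", "TV Show"]})

# One bar per distinct Category value, with the bar height equal to its count.
ax = sns.countplot(x="Category", data=data)
plt.show()
```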

🔰 Que-4) Display the records of all the movies that were released in the year 2013?

This is an interesting question where you have to filter the data on two conditions and display the resulting dataframe. We know that to display a filtered dataframe we put the conditions in square brackets, like data[conditions].

😏 How to filter data based on multiple conditions?

To filter the data, we put each condition in parentheses inside the square brackets and join them with bitwise operators. Here we want records that are movies and whose year is 2013, so we need the bitwise AND operator (&). First I will add the year as a new column to the dataset and then apply the conditions.

To make a new column, write the new column name in square brackets on the dataframe and assign to it the values you want it to hold.
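Creating the year column can be sketched as follows. The rows are made up, and the date format is an assumption about how the release dates are written; the column names Release_Date and year are the ones used elsewhere in this article.

```python
import pandas as pd

# Made-up rows; the date format is an assumption.
data = pd.DataFrame({
    "Title": ["Film A", "Show B"],
    "Release_Date": ["January 1, 2013", "August 14, 2020"],
})

data["Release_Date"] = pd.to_datetime(data["Release_Date"])
data["year"] = data["Release_Date"].dt.year  # new column holding only the year
print(data)
```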

# Filtering based on 2 conditions

data[(data['year'] == 2013) & (data['Category']=="Movie")]

🔰 Que-5) Show the titles of all TV Shows that were released in India only?

This question is just like the previous one: you filter the data on two conditions, and to print only the titles you put the Title column in square brackets at the end.

data[(data['Category'] == 'TV Show') & (data['Country'] == 'India')]['Title']

🔰 Que-6) Display the names of the top 10 directors who have published the highest number of TV shows and movies on Netflix?

The value_counts function is very useful because you can chain other functions onto its output. In this case, you can simply call value_counts on the Director column and then call head with 10 rows.

👉 We can also convert the output of value_counts to a dataframe using the to_frame function and then use the head method with 10 rows. You can also use the groupby method to solve this type of problem.
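The three approaches mentioned above can be sketched on made-up data like this (the director names are placeholders):

```python
import pandas as pd

# Made-up rows; director names are placeholders.
data = pd.DataFrame({
    "Director": ["Dir X", "Dir Y", "Dir X", "Dir Z", "Dir X", "Dir Y"],
})

# value_counts sorts from most to least frequent, so head(10) is the top 10.
top = data["Director"].value_counts().head(10)
print(top)

# The same result as a dataframe instead of a series.
top_frame = data["Director"].value_counts().to_frame().head(10)

# groupby alternative: count rows per director, then sort descending.
via_groupby = data.groupby("Director").size().sort_values(ascending=False).head(10)
print(via_groupby)
```

Note that value_counts ignores missing directors by default, so rows without a director do not appear in the ranking.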

🔰 Que-7) Display all the records of movies where the genre is 'Comedies' or the country of release is 'United Kingdom'?

This question is again filter-based, but here there are three conditions and you have to use two bitwise operators. A row qualifies in two ways: it is a movie in the 'Comedies' genre, even if it was released in some other country, or its country is 'United Kingdom'. Because & binds more tightly than |, the first two conditions are grouped together; the extra parentheses below only make that grouping explicit.

data[((data['Category'] == 'Movie') & (data['Type'] == 'Comedies')) | (data['Country'] == 'United Kingdom')]

🔰 Que-8) In how many movies was Tom Cruise cast?

We have learned above that to search for a particular value in a column we can use the equality operator (==), the isin function, or the contains function. But the cast column contains a large number of missing values, so filtering with these functions can raise an error, and an equality comparison returns an empty result. For now we will simply drop all the null values, but when you work on a real problem statement you need to treat the null values properly, especially when there are many of them, because you cannot just delete them.

data_new = data.dropna()

data_new.shape
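With the null values dropped, the count itself can be sketched as below. The rows and cast lists are made up, and the column name Cast is an assumption about how the cast column is named in the dataset.

```python
import numpy as np
import pandas as pd

# Made-up rows; "Cast" as the column name is an assumption.
data = pd.DataFrame({
    "Category": ["Movie", "Movie", "TV Show", "Movie"],
    "Cast": ["Tom Cruise, Actor B", np.nan, "Actor C", "Actor D, Tom Cruise"],
})

data_new = data.dropna()

# Keep movies whose cast string mentions the actor anywhere in it.
tom_movies = data_new[
    (data_new["Category"] == "Movie")
    & (data_new["Cast"].str.contains("Tom Cruise"))
]
count = len(tom_movies)
print(count)
```

If you would rather not drop rows, str.contains also accepts na=False, which treats missing cast values as non-matches.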

😏 To know more about missing values and their treatment and learn different methods please refer to this blog. It will teach each popular method for missing value treatment. 💥

🔰 Que-9) What are different ratings defined by Netflix?

This question can be asked in different ways and with additional conditions. Here you need to print all the unique rating values used by Netflix. To print all the unique values pandas has the unique function, and to print the total number of unique values it has the nunique function.

data['Rating'].unique()

Let us look at how different questions in the same category can be asked.

👷 Q-9.1) How many movies got a 'TV-14' rating in Canada?

This question requires filtering the data on three conditions, using the Rating, Category, and Country columns.

data[(data['Category'] == 'Movie') & (data['Rating'] == 'TV-14') & (data['Country'] == 'Canada')].shape

👷 Q-9.2) How many TV shows got an R rating after the year 2018?

You can write the query for this in the same way; one of the conditions uses a greater-than comparison.

data[(data['Category'] == 'TV Show') & (data['Rating'] == 'R') & (data['year'] > 2018)]

🔰 Que-10) What is the maximum duration of a movie or a TV show on Netflix?

The duration column is a string column, so you cannot apply the maximum function to it directly. If you print all its unique values, you will observe that some values end with a minutes unit and others with a season unit, so the first step is to separate the number from that text.

👉 What are the possible solutions in this case? One option is to remove the word, keep only the number, and process the data further. The other is to split the data into two new columns, one storing the numeric value and the other storing the unit (minutes or seasons). We will go with the second option.

#create two new columns: Minutes (the number) and Unit (its unit)

data[['Minutes', 'Unit']] = data['Duration'].str.split(' ', expand=True)

data['Minutes'] = data['Minutes'].astype(int) #convert to numeric

#Now find the maximum value in the Minutes column

print(data['Minutes'].max())
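Because the Minutes column now holds both minute counts and season counts, a single maximum mixes the two units. One possible refinement, sketched here on made-up durations (the exact unit spellings "min", "Season", and "Seasons" are assumptions about the data), is to take the maximum per unit:

```python
import pandas as pd

# Made-up durations; the unit spellings are assumptions.
data = pd.DataFrame({"Duration": ["90 min", "2 Seasons", "1 Season", "120 min"]})

data[["Minutes", "Unit"]] = data["Duration"].str.split(" ", expand=True)
data["Minutes"] = data["Minutes"].astype(int)

# Treat "Season" and "Seasons" as the same unit before grouping.
data["Unit"] = data["Unit"].replace({"Seasons": "Season"})

longest = data.groupby("Unit")["Minutes"].max()  # one maximum per unit
print(longest)
```

This reports the longest movie in minutes and the longest show in seasons separately, which is usually easier to interpret.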

⚠️ Note

The dataset is very nice, and many more questions can be framed on it. My only request is that you do not leave the project as it is: explore some more Pandas methods and try more graphs on the dataset. I want to refer you to a very good article on exploratory data analysis that will guide you through the next steps after this 10th question, and you will learn a lot from it.

👉 Complete Code Notebook of the article - Kaggle

Conclusion

We have learned a lot about data analysis in this short article, starting with a basic understanding of the data and then going deeper to extract different relationships and patterns from it. I hope you have learned how questions can be framed and how to approach them with the help of data to work out the answers.

😋 I hope that you enjoyed the article. If you have any doubts or suggestions, please leave a comment or send them to us through the contact form. It will help many other readers learn something new.

Thanks for your time! 😊